It’s been quite a year. Here’s a grab bag of what stuck in my mind from 2025 and what’s coming next.

Personal Stuff

I started 2025 with a New Year’s resolution to deadlift 300lb. I hit 300 in the middle of the year, upped my resolution to 350lb, but only made it to 310lb. I’d like to hit 350 in 2026, but if I plateau and stay healthy I’m fine with that too.

My big resolution for the new year is to host a gathering at least once a month. Doesn’t have to be anything fancy, just an excuse to see people. My plan to make this happen: even if I’m busy, it’s easy to make a big batch of pasta and have guests bring side dishes and wine.

2025 was the first year I really noticed my dog getting old. He’s 11 and doing well for his age, but I’m more aware that I probably only have a few more years left with him. Retired racing greyhounds make great pets:

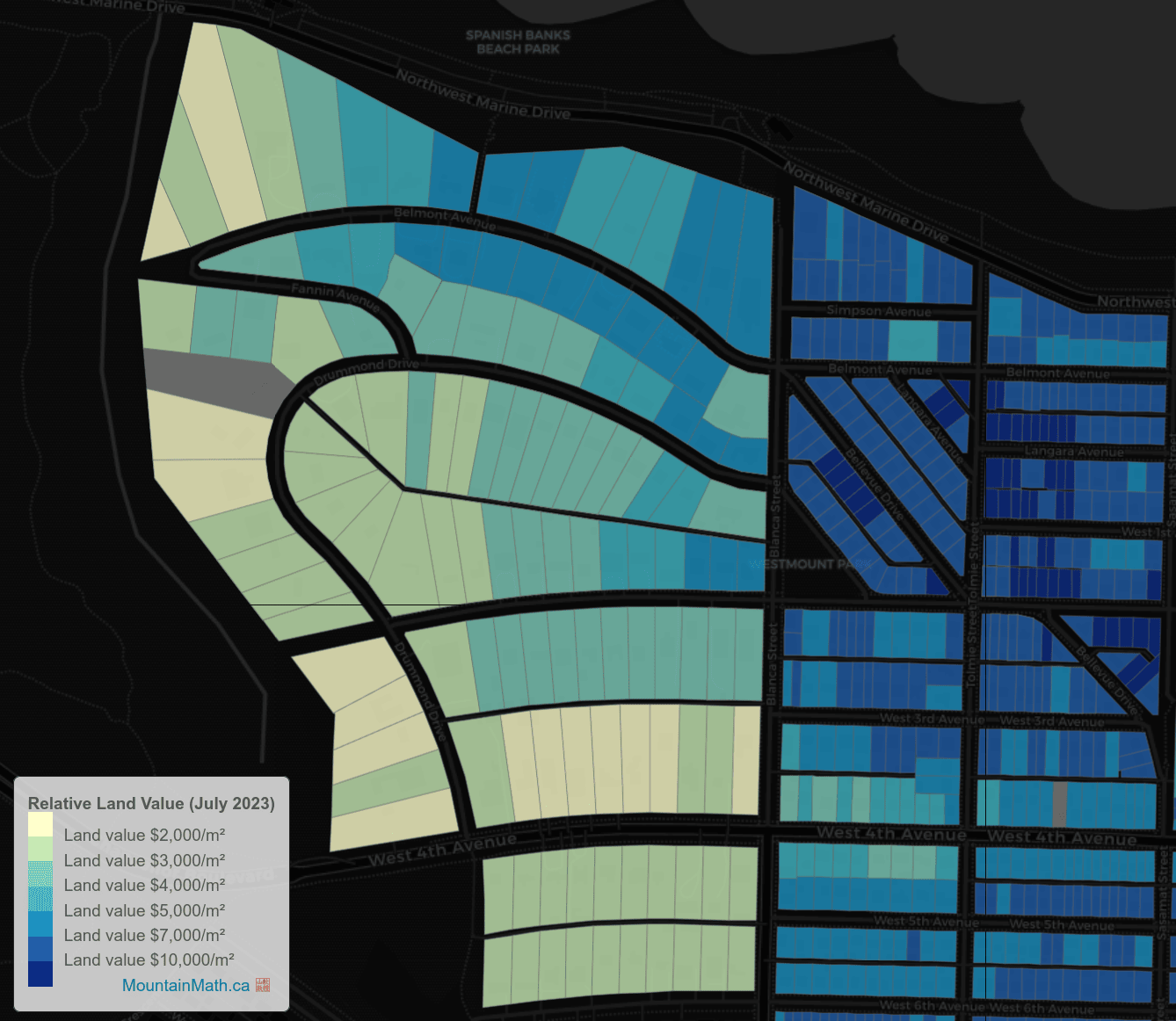

We spent a lot of time in late 2025 looking at real estate. After 7 years we’re a little bored with our current place, and it’d be nice to have a bedroom for guests. It’s been an emotional roller-coaster; in November we had an offer accepted on a gorgeous condo downtown, only to back out after the inspection found some issues. But hey, it could be worse: when we bought our current place the market was so hot that people didn’t even get inspections done 😬.

Work Stuff

I got promoted to Staff Engineer in December. This required a ton of effort plus some luck, and I’m very proud of it; feels like I finally made it, y’know? The promotion felt especially good because during the tech job rout of late 2022 I accepted a Staff offer from another mid-sized tech company, and then after 3 weeks of delay they retracted it.

Overall it was a very good year for work. I shipped a product, worked on a high-profile keynote demo with OpenAI, and changed teams to launch a new product that’s attracting a lot of interest. I also flew down to SF twice to give talks for work; here’s one I’m particularly proud of.

Software

It is an incredibly crazy time to be working in software.

It feels like 2025 was the year when agents blew up. A year ago, I was occasionally using Aider to make commit-sized changes to software projects, and I felt like I was ahead of the curve. Today I tend to use Claude Code (sometimes Codex CLI) to make more ambitious changes, and both are far more capable of iterating on a change until they get it right.

My day-to-day now involves less “hands-on” coding and more high-level management of coding agents. It’s become incredibly cheap to try things out, and Opus 4.5 is remarkably capable.

I’m spending a lot of time with these new tools and I still feel quite a bit of FOMO. It helps to know that I’m not the only one.